Introduction

It has already been a week since I created the knowledge graph in my local environment and started building the GraphRAG environment. It is finally in a decent working condition. This post is a summary of GraphRAC using Ollama and neo4j that I built in my local environment.

Sources

- Building a local GraphRAG environment with Llama 3.2 and Neo4j - I ran the code in this article.

- ChatOllama .invoke method giving ‘NoneType’ object is not iterable #28287 Following the link in the answer, thus, the workaround is to use a version of ollama earlier than 0.4.0.

Build a knowledge graph

Data (text) to be used as external information

The text extracted from wikipedia in “Creating text data for RAG from Wikipedia dump data” is a large amount of data, so only the following 6 items were extracted. Counting the number of characters in the text extracted by “wc -m,” the number was 15,041.

- R process

- S process

- Hubble Space Telescope

- Nancy Grace Roman Space Telescope

- B2FH paper

- Nuclear Physics

Below is the code to create a knowledge graph from the text.

import required packages

# パッケージをimport

import os

import pprint

from tempfile import NamedTemporaryFile

from langchain_community.document_loaders import TextLoader

from langchain_community.graphs import Neo4jGraph

from langchain_community.vectorstores import Neo4jVector

from langchain_experimental.graph_transformers import LLMGraphTransformer

from neo4j import GraphDatabase

import pandas as pd

Connect to neo4j and create a graph

In my environment, I have neo4j built on a different server than the one running JupyterLab.

# neo4j用の環境変数を設定

os.environ['NEO4J_URI'] = 'bolt://xxx.xxx.xxx.xxx:7687'

os.environ['NEO4J_USERNAME'] = 'neo4j'

os.environ['NEO4J_PASSWORD'] = 'password'

graph = Neo4jGraph()

graph.query('MATCH (n) DETACH DELETE n;')

Read a text file and split into chunks

# Load documents from a text file

WIKI_TEXT = "./wiki_text2"

CHUNK_SIZE = 300

CHUNK_OVERLAP = 30

from langchain.text_splitter import RecursiveCharacterTextSplitter

loader = TextLoader(file_path=WIKI_TEXT, encoding = 'UTF-8')

docs = loader.load()

# Split documents into chunks

text_splitter = RecursiveCharacterTextSplitter(chunk_size=CHUNK_SIZE, chunk_overlap=CHUNK_OVERLAP)

documents = text_splitter.split_documents(documents=docs)

The above “wiki_text2” is a text file extracted from wikipedia.

ollama’s gemma-2b-jpn-it set to LLM

ollama is also running on a different server.

# ollama(gemma-2-2b-jpn)をLLMとして設定

from langchain_ollama.llms import OllamaLLM

llm = OllamaLLM(base_url = "http://xxx.xxx.xxx.xxx:11434", model="schroneko/gemma-2-2b-jpn-it", temperature=0)

Create knowledge graphs from text

llm_transformer = LLMGraphTransformer(llm=llm)

# ここでLLMでgraph化

graph_documents = llm_transformer.convert_to_graph_documents(documents)

graph.add_graph_documents(

graph_documents,

baseEntityLabel=True,

include_source=True

)

pprint.pprint(graph.query("MATCH (s)-[r:!MENTIONS]->(t) RETURN s,r,t LIMIT 50"))

In my environment, it took 374 seconds (about 6 minutes).

Set up embedding and create vector index

from langchain_ollama import OllamaEmbeddings

# ollama embeddings

embeddings = OllamaEmbeddings(

model="mxbai-embed-large",

base_url = "http://192.168.11.4:11434",

)

from langchain.vectorstores import Neo4jVector

vector_index = Neo4jVector.from_existing_graph(

embeddings,

search_type="hybrid",

node_label="Document",

text_node_properties=["text"],

embedding_node_property="embedding"

)

Create full text index

# Connect to the Neo4j, then create a full-text index

driver = GraphDatabase.driver(

uri=os.environ["NEO4J_URI"],

auth=(os.environ["NEO4J_USERNAME"], os.environ["NEO4J_PASSWORD"])

)

def create_fulltext_index(tx):

query = '''

CREATE FULLTEXT INDEX `fulltext_entity_id`

FOR (n:__Entity__)

ON EACH [n.id];

'''

tx.run(query)

try:

with driver.session() as session:

session.execute_write(create_fulltext_index)

print("Fulltext index created successfully.")

except Exception as e:

print(f"Failed to create index: {e}")

driver.close()

When creating a full text index, I got an error message that there was already an index. I connected to neo4j from a browser, dropped the index with cypher, and ran it again.

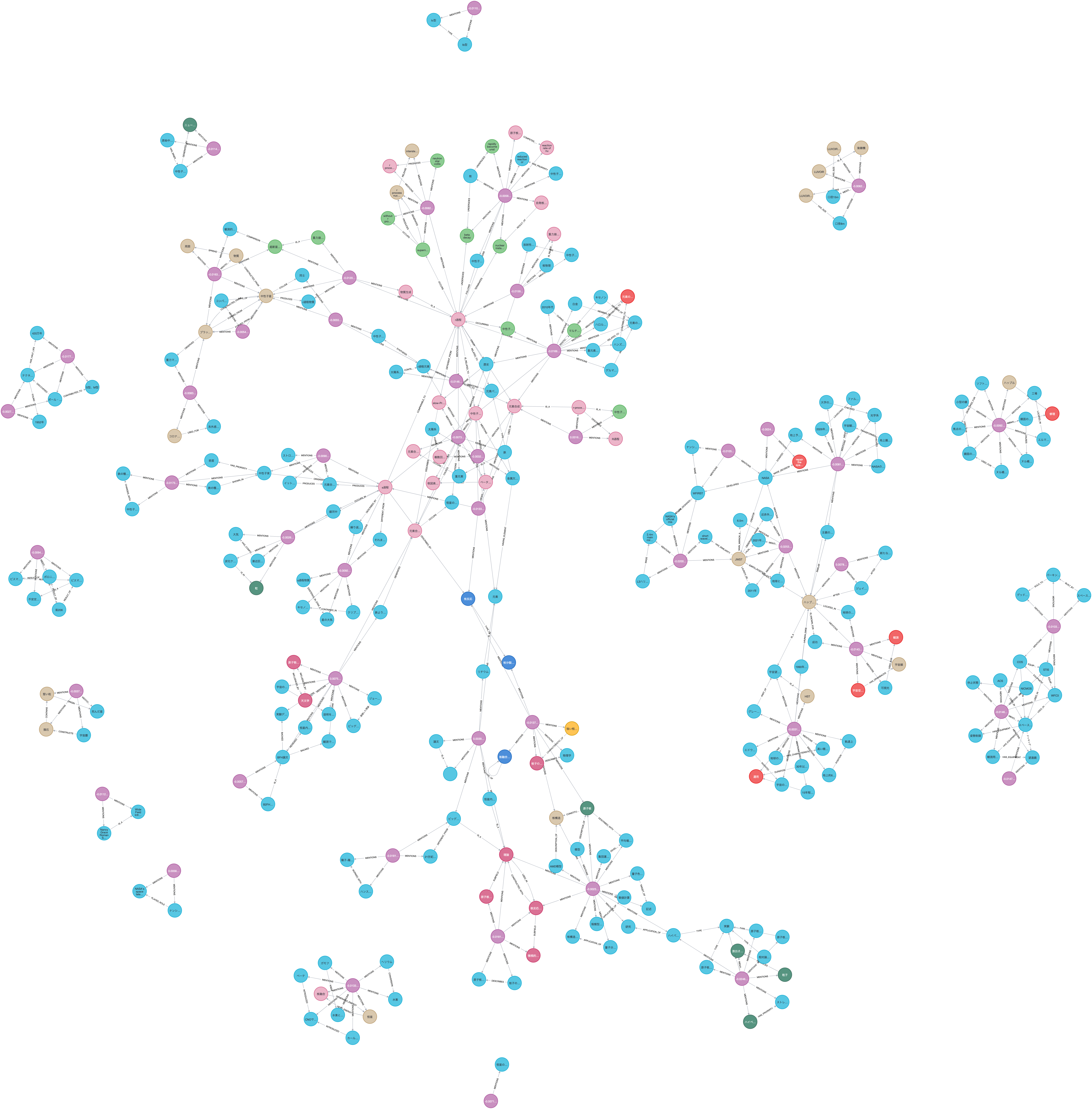

After creation, I connected to neo4j from a browser and downloaded the graph structure by

MATCH p=()-[]-() RETURN p

Building GraphRAG

Using the code in Source 1. as a reference, I built a RAG system using the knowledge graph I have built up to this point as external information.

Dealing with errors

The following error occurred at the point of invoke by passing a question. They are listed below in the order in which they were handled.

NotImplementedError in llm.with_structured_output(dict_schema)

Changed “llm = OllamaLLM(…)” to “llm = ChatOllama(…)”.

ResponseError: schroneko/gemma-2-2b-jpn-it does not support tools

This error occurred at the point where I passed the question and chain.invoke().

Changed “llm = ChatOllama(…, model=”schroneko/gemma-2-2b-jpn-it“, …)” to “llm = OllamaLLM(…, model =”llma3.3“, …)”.

TypeError: ‘NoneType’ object is not iterable

This error also occurred at the point where I passed the question and chain.invoke().

Searching the net, I found the article in source 2. The article stated that you should use a version of ollama lower than 0.4.0. The version of ollama I was using was 0.4.3. I thought it would be better to use the latest version (0.4.6) before downgrading, so I changed to 0.4.6, but the error could not be avoided. Then I changed to 0.3.3, but the error still could not be avoided.

I looked again at source 1. and found that the version of python was 3.11. The version of python in my environment was 3.10.12. Half-heartedly, I changed the python version of the JupyterLab container I had been using to 3.11. See yesterday’s this post for more information about that.

The changed python version is as follows.

# python --version

Python 3.11.10

ollama as LLM

As mentioned in the error response above, I was finally able to successfully execute the code in source 1. The code for the part where I tried various things is as follows. The rest of the code is the same as in Source 1.

# ollama(gemma-2-2b-jpn)をLLMとして設定

from langchain_ollama.llms import OllamaLLM

from langchain_ollama import ChatOllama

#llm = OllamaLLM(base_url = "http://192.168.11.4:11434", model="schroneko/gemma-2-2b-jpn-it", temperature=0, format="json")

#llm = ChatOllama(base_url = "http://192.168.11.4:11434", model="schroneko/gemma-2-2b-jpn-it", temperature=0, format="json")

#llm = OllamaLLM(base_url = "http://192.168.11.4:11434", model="llama3.2", temperature=0, format="json")

llm = ChatOllama(base_url = "http://192.168.11.4:11434", model="llama3.2", temperature=0, format="json")

Execution Result

print(chain.invoke("R過程について教えてください"))

{ "R過程" : "中性子星の衝突などの爆発的な現象によって起こる、元素合成における中性子を多くもつ鉄より重い元素のほぼ半分を合成する過程。迅速かつ連続的に中性子をニッケル56のような核種に取り込むことで起きる。" }

print(chain.invoke("ナンシー・グレース・ローマン宇宙望遠鏡"))

{"ナンシー・グレース・ローマン宇宙望遠鏡" : "2020年代半ばに打ち上げを目指し、日本を含む国際協力で進められているアメリカ航空宇宙局 (NASA) の広視野赤外線宇宙望遠鏡計画。主鏡の口径は2.4m、視野はハッブル宇宙望遠鏡よりも100倍広く、焦点距離は短くなる。太陽系外の惑星の撮影や、ダークエネルギーの存在確認に役立てることができると考えられる。}"}

print(chain.invoke("ナンシー・グレース・ローマン宇宙望遠鏡について教えて"))

{"ナンシー・グレース・ローマン宇宙望遠鏡" :"ナンシー・グレース・ローマン宇宙望遠鏡は、2020年代半ばの打ち上げを目指し、日本を含む国際協力で進められているアメリカ航空宇宙局 (NASA) の広視野赤外線宇宙望遠鏡計画。正式名称は「ナンシー・グレース・ローマン宇宙望遠鏡(Nancy Grace Roman Space Telescope)」です。"}

print(chain.invoke("B2FH論文"))

{"B2FH論文" : "元素の起源に関する記念碑的な論文。著者はマーガレット・バービッジ、ジェフリー・バービッジ、ウィリアム・ファウラー、フレッド・ホイルの4名で、1955年から1956年に執筆され、1957年にアメリカ物理学会の査読付き学術誌" }

print(chain.invoke("B2FH論文について教えてください"))

{

"B2FH論文" : {

"題名" : "Synthesis of the Elements in Stars",

"執筆期間" : 1955

, "執筆場所" : "ケンブリッジ大学とカリフォルニア工科大学",

"査読誌" : "Reviews of Modern Physics"

}

}

Conclusion

It took more than a week after preparing the text for the external information, but I were finally able to build GraphRAG.

Since it takes a reasonable amount of time to create the graph, it seems to be problematic when dealing with large scale external data.

As you can see from the above results, the answers seem to be a little bit disorganized when the questions are written in sentences. This is an area for improvement.