Introduction

I bought a MacBook Air (M4) in March. Because I wanted to run LLMs locally, I configured it with the maximum 32 GB of memory. I have finally been able to try a local LLM with LM Studio, although it took a while: I started working in Kanda in April, and my weekends were taken up by various events.

Sources

- Your local AI toolkit. - LM Studio official website.

- Local LLM Works on Mac! Let’s Use Large Language Models with ‘LM Studio’ - PC Watch article.

- Building a Local LLM Environment Using LM Studio - Zenn blog post.

Installation

Download and install

Download LM Studio for Mac (M series) from the official LM Studio website by clicking “LM-Studio-0.3.16-8-arm64.dmg”. Then install it the usual Mac way: open the disk image and copy LM Studio to the Applications folder.

Install language models

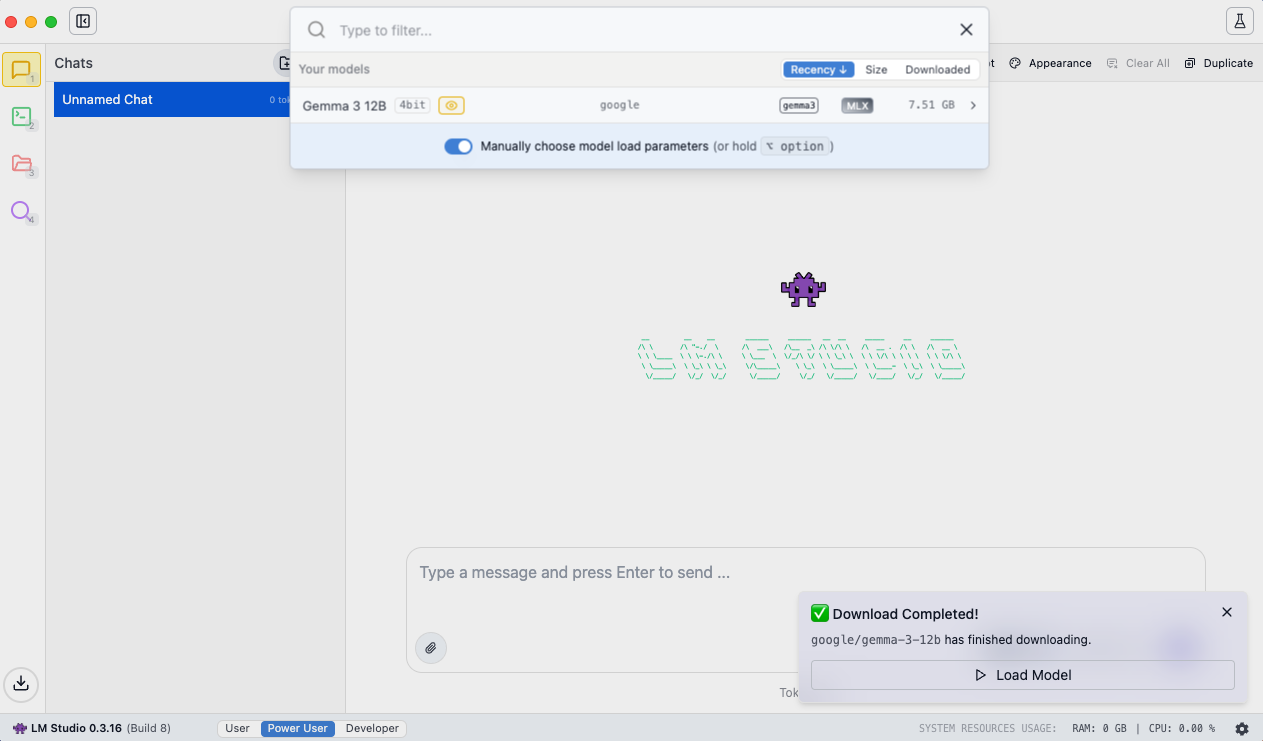

Start LM Studio and click “Select a model to load” at the top to download “gemma-3-12b”.

After the download is complete, the following screen will appear. Click “Load Model” at the bottom right to use gemma-3-12b.

Run the model

Start Chat

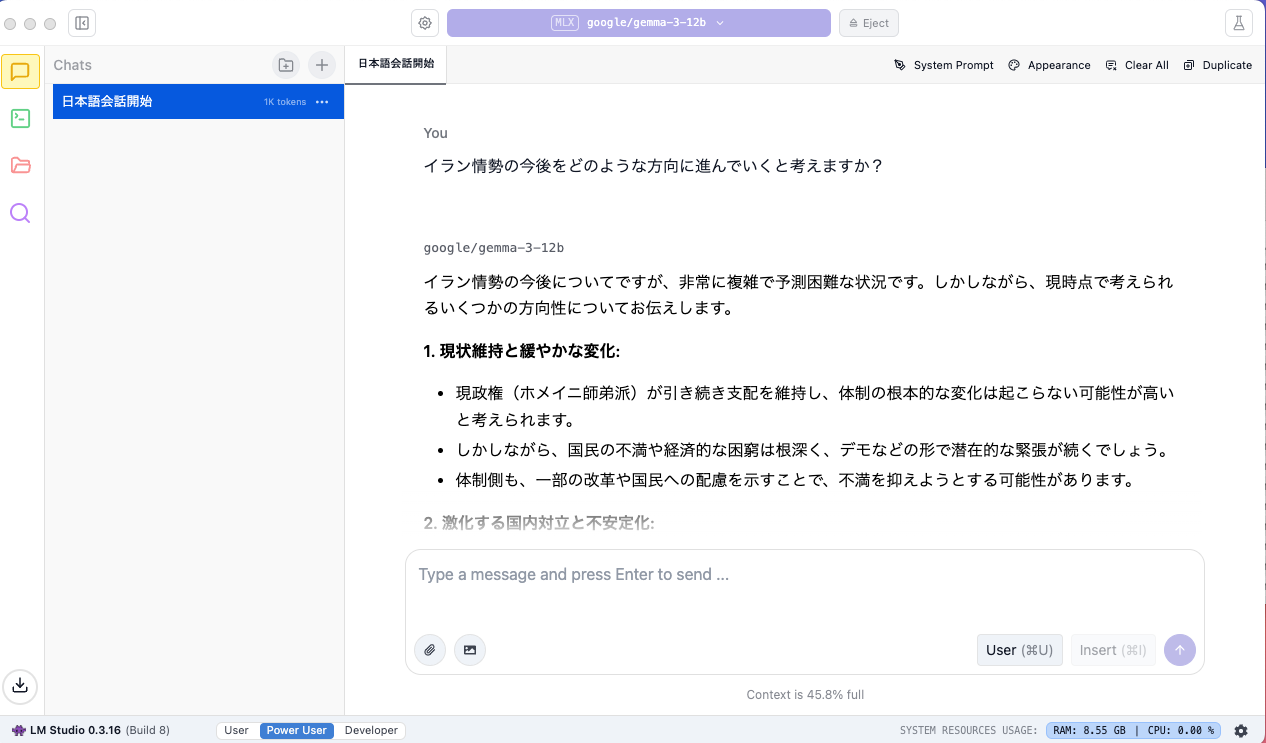

At this point, gemma-3-12b is ready to use and you can start chatting. The model in use is displayed at the top center of the screen; in this case, it shows “google/gemma-3-12b”.

When I asked whether it could converse in Japanese, it replied, “Yes, it is possible to speak in Japanese.” I then asked three questions: “Can you tell me how to make delicious yakisoba noodles?”, “What direction do you think the situation in Iran will take in the future?”, and “How do you predict the outcome of the Tokyo Metropolitan Assembly election?” Below is part of the response regarding the situation in Iran. The answer was organized into four perspectives with three points each, followed by an overall outlook at the end, which was better than what a mediocre commentator on a TV “wide show” (a Japanese daytime talk show) would offer.
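
For anyone who would rather send the same kind of question from a script than from the chat window, here is a minimal sketch. It assumes that LM Studio's local server has been started and is listening on its default address (`http://localhost:1234/v1`), and that the model identifier matches the name shown at the top of the chat screen. I did not use the server in this article, so treat the URL, the model name, and the helper names `build_chat_request` and `ask` as illustrative assumptions rather than a tested setup.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is running on its default port.
BASE_URL = "http://localhost:1234/v1"
# Assumption: same identifier as the one shown at the top of the chat screen.
MODEL = "google/gemma-3-12b"


def build_chat_request(question, model=MODEL):
    """Build an OpenAI-style chat completion payload for a single question."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": question}],
        "temperature": 0.7,
    }


def ask(question, base_url=BASE_URL, timeout=300):
    """POST one question to the local server and return the reply text."""
    body = json.dumps(build_chat_request(question)).encode("utf-8")
    req = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        data = json.load(resp)
    # OpenAI-compatible responses put the reply in choices[0].message.content.
    return data["choices"][0]["message"]["content"]
```

With the server running, something like `print(ask("Can you tell me how to make delicious yakisoba noodles?"))` should print the model's reply. Only the Python standard library is used, so nothing extra needs to be installed.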

About the future

The time from question to answer was shorter than I expected; subjectively, it felt about the same as with ChatGPT Plus. It is great that a local LLM can deliver reasonably satisfactory quality, in both speed and content of answers, on a laptop like the MacBook Air.
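
My speed impression above is only a feeling. To put a rough number on it, the elapsed time could be measured with a small helper like the one below. This is a sketch, not something I ran for this article: `timed` and `chars_per_second` are hypothetical names, and `fn` stands for any function that sends a question to the model and returns the reply as text.

```python
import time


def timed(fn, *args, **kwargs):
    """Call fn and return (result, elapsed_seconds) using a monotonic clock."""
    start = time.perf_counter()
    result = fn(*args, **kwargs)
    elapsed = time.perf_counter() - start
    return result, elapsed


def chars_per_second(text, elapsed):
    """Rough throughput: characters in the reply divided by elapsed time."""
    return len(text) / elapsed if elapsed > 0 else 0.0
```

Characters per second is only a crude stand-in for tokens per second, but it is enough to compare the same question across models or machines.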

Next, I plan to try out AI agents on my MacBook Air.