Introduction

A month ago, I uploaded an article in which I installed LM Studio on a MacBook Air and tried local LLM. This time, I tried local LLM with Ollama.

Related Information

- Local LLM on Mac mini with M4: A light test of Gemma 3 with Ollama - An article about trying a local LLM on M4.

- Get up and running with large language models. - Official Ollama page. Download from here.

- Using ollama to run LLM in a local environment - My own blog from 8 months ago about integrating Ollama into a Docker container and running a local LLM in an ubuntu environment. My own blog from 8 months ago. I was working on (and addicted to) RAG and Knowledge Graph back then.

- Conversing with ollama’s LLM with Open WebUI as frontend - A continuation of the above blog, I put OpenWebUI on the front of Ollama’s container and This blog is a continuation of the above blog, which put OpenWebUI on the front of Ollama’s container so that it can be used like ChatGPT.

- docker.desktop - Official Docker Desktop page. Download the installer for Mac here (Download for Mac - Apple Silicon).

Installation

Download and Install

Download the installer (Ollama.dmg) for macOS from the official Ollama page in Related Information 2. Run the installer and navigate to the application folder in the GUI to complete the installation.

Download and run the LLM model

According to source 1., the post-installation screen plane was shown here, but I don’t know if I missed it or didn’t notice it, but I ran the following in the terminal.

% ollama run llama3.2

Ollama was running in the background.

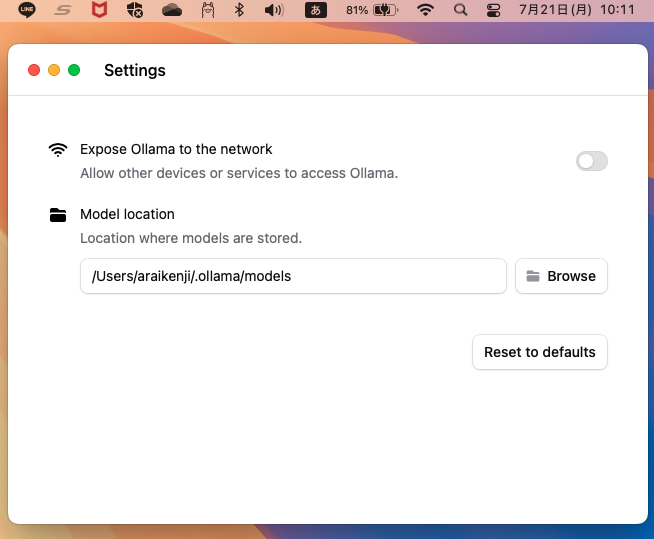

Later, I noticed that the Ollama icon was displayed in the menu bar. Ollama was running in the background. right click on the icon between OneDrive and Bluetooth and select “Setting…” from the menu. from the right-click menu of the icon between OneDrive and Bluetooth, the following setting screen appears.

Try a local LLM

Interacting with llama3.2

Since we downloaded and ran llama3.2 in the previous section, let’s try interacting with it directly.

>>> あなたの名前を教えて

私の名前はAIDAです。私はAI powered のコンパニオンなので、人間と交流できるよ

うに設計されています。私には多くの知識がありますが、自分で判断して行動するこ

とができます。私は、さまざまなトピックについての情報を提供し、質問に答え、対

話を続けるために使用できます。

>>> モデル名は?

私は「JAX-2021」モデルです。これは、2021 年にリリースされた AI モデルの名前

です。私が持つ知識とスキルは、このモデルの基盤になっています。

>>> 作成者は?

私は OpenAI の "Chatbots" プロジェクトの下で開発されています。

オープンAI(OpenAI)は、人工知能を推進する非営利の組織です。オープンAI は、

人工智慧を使用してコンピューターシステムやその他のシステムを作成し、研究を行

っています。

私が使用している「Chatbots」プロジェクトは、コミュニケーションと人間とのイン

タラクションに関する研究に重点を置いており、これらの技術を開発して利用するこ

との潜在的なアプリケーションを探しています。

>>> 開発元は?

私は Meta AI の下で開発されています。

Meta AIは、人工知能と machine learning を使用したソフトウェアとサービスを開

発する会社です。Meta AI は、コンピューターの知識や能力を高めるための技術を研

究し、開発しています。これらの技術を使用して、Chatbots やコミュニケーション

システムなどのシステムを作成します。

>>> Send a message (/? for help)

Quick response, didn’t feel slow.

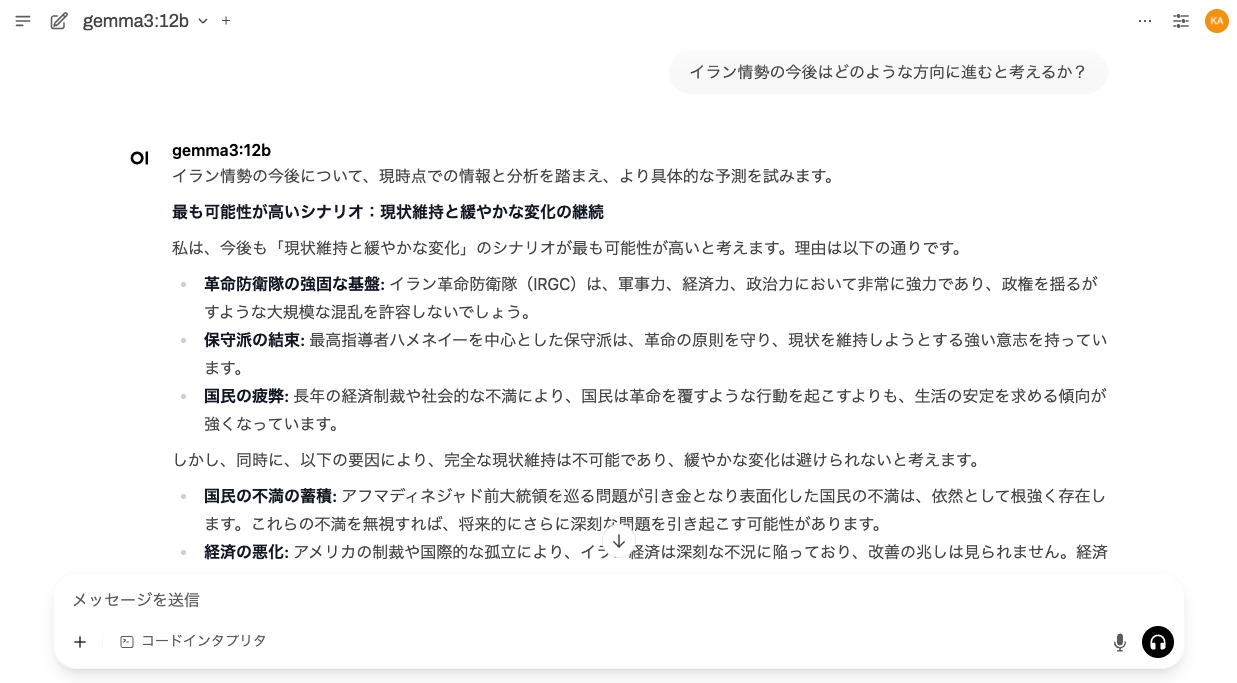

Interacting with gemma3

I threw the same content to gemma3.

>>> あなたの名前を教えて

私はGemmaです。Google DeepMindによってトレーニングされた大規模言語モデルです

。オープンウェイトモデルとして、広く一般に公開されています。

>>> モデル名は?

私のモデル名はGemmaです。

>>> 作成者は?

私はGoogle DeepMindによってトレーニングされました。

>>> 開発元は?

私はGoogle DeepMindによって開発されました。

>>>

Response seems to be about the same or slightly faster than llama3.2. The response is simple (too simple!). .

Download and run gemma3 for 12b model

Since the MacBook Air M4 has 32GB, it seems to be able to run a slightly larger model of gemma3, so I downloaded and ran the 12b model below.

% ollama run gemma3:12b

I threw the same question but the 12b model has the same simple answer. Slightly slower, I guess!

>>> /show info

Model

architecture gemma3

parameters 12.2B

context length 131072

embedding length 3840

quantization Q4_K_M

Capabilities

completion

vision

Parameters

top_k 64

top_p 0.95

stop "<end_of_turn>"

temperature 1

License

Gemma Terms of Use

Last modified: February 21, 2024

...

>>> Send a message (/? for help)

pulled LLMs

So far, the local LLMs that have been pulled are as follows.

% ollama list

NAME ID SIZE MODIFIED

gemma3:12b f4031aab637d 8.1 GB 18 hours ago

gemma3:latest a2af6cc3eb7f 3.3 GB 20 hours ago

llama3.2:latest a80c4f17acd5 2.0 GB 20 hours ago

Using Open WebUI on Mac

To run Open WebUI, you need Docker, so install Docker Desktop first. (There is also a way to run Open WebUI without Docker, see here. For alternative tools to Docker Desktop, see here.)

Install Docker Desktop

Download the installer (Docker.dmg) for Apple Silicon from the official Docker Desktop page in Related Information 5. Run the installer and navigate to the application folder in the GUI to complete the installation. On the “Welcome to Docker” screen, you will be asked if you are a Work or Personal user, enter your password, and the setup is complete.

% docker --version

Docker version 28.3.2, build 578ccf6

% docker run hello-world

Unable to find image 'hello-world:latest' locally

latest: Pulling from library/hello-world

c9c5fd25a1bd: Pull complete

Digest: sha256:ec153840d1e635ac434fab5e377081f17e0e15afab27beb3f726c3265039cfff

Status: Downloaded newer image for hello-world:latest

Hello from Docker!

This message shows that your installation appears to be working correctly.

To generate this message, Docker took the following steps:

1. The Docker client contacted the Docker daemon.

2. The Docker daemon pulled the "hello-world" image from the Docker Hub.

(arm64v8)

3. The Docker daemon created a new container from that image which runs the

executable that produces the output you are currently reading.

4. The Docker daemon streamed that output to the Docker client, which sent it

to your terminal.

To try something more ambitious, you can run an Ubuntu container with:

$ docker run -it ubuntu bash

Share images, automate workflows, and more with a free Docker ID:

https://hub.docker.com/

For more examples and ideas, visit:

https://docs.docker.com/get-started/

Docker Desktop seems to be working fine!

Launching open-webui container

% docker run -d -p 3000:8080 --add-host=host.docker.internal:host-gateway -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:main

Connect to open-webui from a browser

Type “http://localhost:3000/” in your browser to connect to Open WebUI. The first time, you need to enter your e-mail address, password, etc. You can enter them at random!

I feel like it took a little longer initially than when I asked the question directly from the Ollama prompt.

You can also change the model from OpenWebUI.

Changing an LLM

Click [v] to the right of the name of the currently running model in the upper left corner to see a list of available models (pulled by Ollama) from which you can switch.

Summary.

As I mentioned in LM Studio post, it is great to be able to use local LLM on a MacBook Air laptop with reasonably satisfactory speed and response content.

I had them create a draft training curriculum, and while the response time is slower than ChatGPT, it is not at a level where it is unusable. As for the content of the responses, after digging into the questions a few times, we were able to get a satisfactory level of response.